The Virtual Embodiment Lab in the Cornell Communication Department studies embodiment and presence in virtual environments.

We ask how the way you see your own and others’ actions represented affects how you understand yourself, others, and your environment. We also explore how changing this representation can change people’s perceptions and allow them to collaborate, learn, and experience in ways that they can’t in the real world.

Current Projects

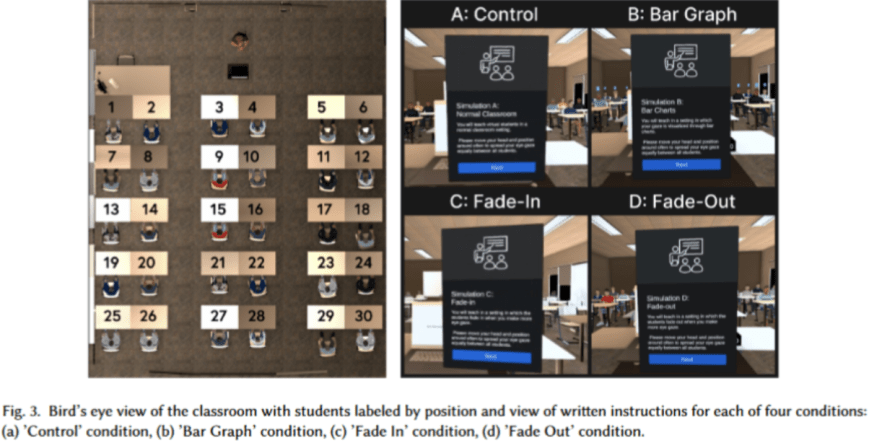

Project 1: NSF: EAGER: A Training Tool to Help Teachers Recognize and Reduce Bias in Their Classroom Behaviors and Increase Interpersonal Competence

Research shows that poor nonverbal communication can harm teachers’ ability to manage their classrooms and engage effectively with their students. In this project, we track teachers’ movements in both virtual and physical classrooms, assess their nonverbal behaviors, and explore what kinds of XR tools are most helpful for self-reflection.

Project 2: NSF HCC: Improving collaboration in remote teams through tools to promote mutual understanding of nonverbal behavior.

This project applies VR to virtual teamwork to improve teams’ ability to communicate and collaborate remotely. The study examines nonverbal communication in teams and the specific skill sets members bring to this communication process. Within VR environments, it analyzes team members’ nonverbal behaviors and how they are interpreted and understood within the team, comparing VR with face-to-face interactions. The study also investigates how users manage their avatars’ presentation in team contexts. As part of outreach for this project, lab members Stephanie Belina and Isabelle McLeod Daphis are leading a project class for students at Lehman Alternative Community School.

Project 3: NSF Convergence Accelerator Track H: Making Virtual Reality Meetings Accessible to Knowledge Workers with Visual Impairments

This project explores how VR for remote work can be made more accessible to people with visual impairments. The study investigates how nonverbal cues such as proximity, gestures, and gaze can be communicated to those who are blind. It focuses on user experience and evidence-based guidelines, showing how VR can support workers, employers, and researchers. Below, we show some prototypes that have been created to explore the accessibility features.

Project 4: ONR LBAA Close-Range Collaboration in Diver-Agent Teams Using Diver Nonverbal Behavior as Cues for Safe Human-Agent Communication in Multiple Modalities

This project explores the use of VR to facilitate the partnership between a human diver and an underwater autonomous vehicle (UAV), with the goals of increasing diving safety, harnessing the strengths of human partners, and mitigating their deficiencies. UAVs have the potential to serve in place of a human partner in a mixed human-robot team, supporting divers with cognitive or sensory impairment. In this project, in collaboration with co-PIs Shiri Azenkot (Enhancing Abilities Lab) and Silvia Ferrari (RealTHASC), our team will develop simulated environments representing human divers in a variety of conditions in order to design ways to build an effective team between the UAV and diver, including the use of haptic communication channels in challenging environments and under conditions of diver impairment.

RealTHASC’s framework for human-robot teamwork simulations

Project 5: Identifying and Predicting Inflection Points in Human-Agent Action Teams Using Relational Event Modeling

This project investigates how human-agent teams navigate transitions and aims to identify interventions that further team success in virtual and physical environments. Using controlled environments, the study explores unanticipated transitions, both internal and external to teams, including how to predict and mitigate them. It will be conducted in an experimental setting with varying contexts and task difficulty to explore interactions within the team: an event is triggered that disrupts team dynamics, and the resulting behavior and the team’s recovery are observed. To identify inflection points, the study will use verbal, nonverbal, and functional neuroimaging data.

Project 6: Social VR for Older Adult Trauma Patients

Older adults account for 25% of hospital trauma patients. These patients experience high rates of pain and are commonly prescribed opioids. Virtual reality has high potential to reduce pain medication use: past research notes that the immersive qualities of VR reduced pain in hospitalized patients. Further, social virtual reality (SVR), where users can play games, talk, and embrace, reduces loneliness and has been shown to increase well-being and social support.

This project is the first of its kind to look at SVR to address pain in older hospitalized patients and is conducted in collaboration with Weill Cornell Medical Trauma Centers.

The study will first look at the applicability and acceptance of SVR for hospitalized patients, their friends, and family. It will explore the additional benefits SVR provides to hospitalized patients compared to traditional video calling. SVR facilitates a higher sense of presence, which may be more effective in reducing pain and distracting the patient from the hospital setting. We are currently conducting a pilot study on the feasibility and acceptance of its use among patients and their extended friends and family.

Past Projects

Companionship

We are following up our recent study of the effects of social support in virtual environments on pain perception with a second study in which participants bring in a friend or family member to interact with in different virtual environments. You can sign up to participate here!

Moon Phases

In collaboration with the Cornell Physics Department, we built a virtual environment in which students can learn about moon phases in an interactive space. The environment situates students above the North Pole or far above the Earth as they grab the moon and move it around themselves while watching it change phases. Throughout this experience, students are quizzed as they interact and learn the material.

The graduate student leads for this project were Byungdoo Kim, Jack Madden, Swati Pandita, and Yilu Sun.

Read the full paper here!

Idea Generation

This project by VHIL alumna Yilu Sun explored nonverbal behavior during competitive and collaborative creative ideation. A poster describing the design and validation of the movement visualization module was presented at IEEE VR in Reutlingen, Germany, on March 21, and the first full paper presenting the results of the study was just published in PLOS One.

Grocery Study

In this study, participants were asked to shop in a virtual grocery store for one week’s worth of groceries in a “food desert”. Items varied in relative health benefits as well as price, with each participant having a budget of $60.

Senior research assistant Aishwariyah Dhyan Vimal led this phase of the project.